AI for GRC

Reimagining GRC: How AI is Solving Capacity and Complexity Challenges

GRC, we have a problem

When it comes to GRC, humans have hit a wall. We simply cannot keep up with the pace of GRC growth with current resources, unless we can dedicate hundreds of people to daily GRC grunt work at incredible costs. For most businesses, there is a growing gap between the GRC team’s capacity and the business's GRC requirements. Several factors are driving that gap:

- Regulatory proliferation. The first taste of this came with GDPR; now, many countries have their own version of it. Then came the war in Ukraine; now, various jurisdictions are deploying export controls. If those are any indication, we will see a similar wave of regulations around AI. It seems the EU AI Act is just the beginning.

- Business complexity. Businesses expand globally, with multiple products, different jurisdictions, and a variety of customers. At the same time, the supply chain is becoming more complex as seen during COVID.

- Technology complexity. The cloud era has proliferated complex technology stacks. AI adoption exacerbates the complexity. While other parts of the organization are making changes to keep up (e.g., DevOps is using copilots to significantly increase its velocity), GRC is mostly stuck in manual processes and is becoming the regular bottleneck in production.

- Scalability. GRC teams cannot scale linearly with the scale of the organization. They are already behind. GRC needs a quantitative and qualitative jump - a revolution - in how its teams operate. That must involve people, processes, and technology.

All these are either part of the cause or are the symptoms of a very familiar problem: teams are being asked to do more with less. The way we manage GRC hasn’t kept pace with the scale or complexity of today’s regulatory environment. Teams are overwhelmed, information is scattered, and processes are often rooted in static documents and reactive audits.

The Risk Spiral: One of the mandates of the GRC team is to organize risk management without introducing new risks in the environment. As GRC teams lag behind, there's a concern that the pressure of "doing more with less" inevitably changes the implicit risk acceptance of the organization. It starts to drift away from the explicit risk acceptance because remediation actions begin to lag, and new risks are not discovered, analyzed, or decided on in a timely manner, or at all. We all see this happening in GRC, yet the technology and processes haven’t changed significantly to pick up the slack. So, when teams are asked to cover more ground with fewer resources, it’s like a skeleton crew police force trying to patrol a whole city—they have to either cut corners and sacrifice effectiveness or skip whole neighborhoods to get it done, greatly increasing risk.

So, if the problem is that we simply cannot keep up, what can be done to fix it?

AI is particularly good for GRC capacity challenges

GRC is particularly well suited to be disrupted (for the better) by the advances in artificial intelligence. The GRC space has several specific qualities that make it a great candidate:

Complexities and requirements of regulations and frameworks are increasing much faster than the capacity of human teams. The way GRC teams approach new/changing frameworks and continuous compliance is generally to add people and add information to the collective intelligence of the organization.

“What If” Scenarios

Consider “What If” scenarios at large enterprises with 1,000+ controls. When they decide to make a material change to one of those controls, there are unknown downstream consequences to an unknown number of those 1,000+ other controls. It could take hundreds of human hours to sort through the complexity until reaching a point where all consequences have been satisfactorily mapped or the unknown consequences are tolerable. What would take humans hundreds of hours is trivial for powerful AI for GRC.
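To make the “What If” question concrete, here is a minimal sketch of the underlying idea: if control dependencies are recorded as a graph, the downstream impact of a change is a graph traversal. The control IDs and the `DEPENDENTS` map are hypothetical illustrations, not data from any real program, and this is not a description of how Trustero implements the analysis.

```python
from collections import deque

# Hypothetical dependency map: each control lists the controls that rely on it.
DEPENDENTS = {
    "AC-01": ["AC-02", "CM-01"],
    "AC-02": ["IR-01"],
    "CM-01": ["IR-01", "VM-01"],
    "IR-01": [],
    "VM-01": [],
}


def downstream_controls(changed, dependents):
    """Return every control reachable from `changed`, in breadth-first order."""
    seen = {changed}
    queue = deque([changed])
    impacted = []
    while queue:
        current = queue.popleft()
        for nxt in dependents.get(current, []):
            if nxt not in seen:  # visit each control once, even if reached twice
                seen.add(nxt)
                impacted.append(nxt)
                queue.append(nxt)
    return impacted


print(downstream_controls("AC-01", DEPENDENTS))
# ['AC-02', 'CM-01', 'IR-01', 'VM-01']
```

The traversal itself is cheap. In practice the hard part is building and maintaining the dependency map across 1,000+ controls, which is where the hundreds of human hours go.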

However, adding people does not scale linearly. When more people are assigned to framework problems, returns degrade as the teams get larger and harder to manage. Each person added to a GRC team does not equal a +1 increase in productivity. Further, due to the highly dynamic nature of the environment (regulation + business), the GRC team may not always have the pertinent information (on time or at all) to properly evaluate the risk and consider the downstream effects of proposed mitigations.

That leads to wrong decisions (not making a decision can be a wrong decision by itself). Meanwhile, regulatory environments are running away at rates that are increasing each year. The current solution is expensive and unsustainable.

On the other hand, AI excels at aggregating and helping analyze vast amounts of data, correlating and helping us find patterns. AI can be used to handle that data and greatly reduce complexity (Trustero CISO Corner - The More You Grow, The Less You Know).

No one knows everything about the environment

GRC teams are the “great aggregators,” and they are expected to know everything about the business, but that knowledge is spread among different people, teams, and systems. It’s usually available, but often poorly organized and classified, even if specialized GRC solutions are implemented. This problem is particularly acute for large, distributed enterprises with complex product lines. AI is unmatched at ingesting and cross-referencing diverse information that is in an uncommon format; for humans, this is some of the most toilsome, frustrating, and error-prone work there is.

In GRC, the ratio of monotonous to strategic work is going in the wrong direction. If quality and structured data are available, specialized AI is incredibly efficient at handling repetitive tasks with high accuracy. The “quality” and "structured" pieces are non-trivial. Trustero customers tell us the ratio of satisfying work to grunt work has been going in the wrong direction for the last several years. This can lead to several critical problems, including:

- Dissatisfaction and a higher churn rate of highly qualified individuals. For example, answering questionnaires (the Siberia of the GRC organization) requires highly skilled individuals with knowledge of the field (SMEs) and the environment (veterans). The ROI of answering questionnaires is questionable, but few can do it effectively.

- High error rate. Psychologically, monotonous work increases the error rate - humans need variety. E.g., some countries have standards about the maximum length of a straight highway. Today, we combat error rates with peer reviews, but that just uses the capacity of another valuable person.

For most companies, “Continuous Compliance” isn't real or even realistic. Despite clever marketing, most mid-size companies and enterprises are not able to achieve true continuous control monitoring. Once they’ve reached a certain level of complexity, it’s far too resource-intensive to regularly evaluate and test large groups of their controls. And, until now, there have been no solutions flexible enough to automate the evaluation. So, the conventional approach has been to test controls during audits and infrequent assessments that give only partial assurance.

In monitoring their risk, organizations face a similar capacity problem. There is a need to analyze a vast and growing amount of data, and the typical solution is to attack it with more people. However, it’s typical that Risk organizations do not scale linearly with the broader company.

Additionally, as teams grow, the efficiency decreases because of the added bureaucracy and hierarchy.

With powerful AI for GRC, everything can be evaluated every day. With AI for GRC, continuous monitoring is actually possible, even with limited resources. Finally, there is an opportunity to “democratize” an approach that was cost-prohibitive for many organizations. At Trustero, this is called Control Assurance - daily examination of your entire GRC posture. It’s the “Full Self-Driving” of GRC.

What is AI for GRC?

AI for GRC represents a foundational change in the way many of the jobs in GRC are performed. The discipline is moving from a Workflow-driven world to an Ask-to-Action one.

Imagine you need to write a paper about an obscure topic you know very little about – the migration patterns of Antarctic birds. You go to the library. When you get there, you have two paths you can take. (1) You can work with the librarian, who can point you to several of the (potentially correct) books to study and go from there. Or, (2) you can sit down with the professor who wrote those books, who will guide you expertly until your paper is done.

Working with the librarian is how things have been done for years (workflows). They know their way around, but they have no domain knowledge or expertise of what’s in the books. The answers are out there, but you have to find them and build the necessary expertise to analyze them. It will take you some time to do so - sometimes a very long time - and you’ll make some errors along the way trying to cross-reference all the sources.

Working with the professor is AI for GRC. It means the complete knowledge of a domain is combined with the collective GRC knowledge of an organization – all in one place, organized, structured, and ready to enable AI actions that dramatically improve the efficiency and effectiveness of the GRC team.

AI for GRC v. LLMs in Workflows

AI for GRC is also different from LLM functionality that’s inserted into parts of a workflow. It’s not the ability to read a SOC 2 report and give you a summary, general guidance around a control, or even answer a security questionnaire. These are nice things to have, and they speed up certain parts of workflows. They are also all Trustero features - but they do not dramatically improve efficiency, they are not verticalized and specific, and they can be done by most generic LLMs. And, those generic LLMs are typically free. That’s why we give those features away for free at Trustero.com.

AI for GRC not only speeds up the steps within workflows; it skips large parts of them, sometimes skips them altogether, and finishes work autonomously.

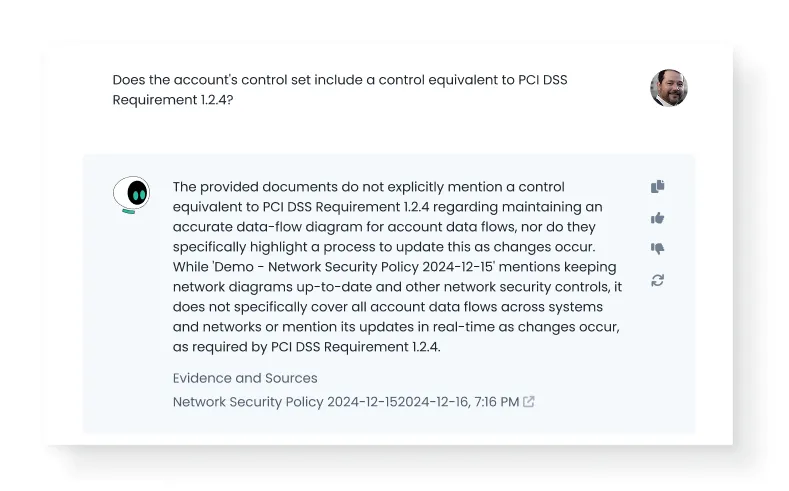

AI for GRC is defined by purpose-built, agentic functionality, plus actionable insights for GRC and adjacent teams. It’s advanced functionality that you cannot get with NotebookLM, ChatGPT, or any other general-purpose AI. With vast amounts of information (both internal and external), AI for GRC represents a shift in how businesses approach their GRC program as a whole, by handing over some human tasks fully to agentic processes and assigning a single starting point for all GRC issues. That point is where all analysis begins: it’s where the compliance team plans, guidance is given to control owners, reports are generated, and more. This signifies a departure from traditional, often siloed, workflow-driven approaches to GRC. In Trustero, this single starting point is Trustero Intelligence, our AI assistant.

Accuracy in AI for GRC

Sometimes AI hallucinates or gets things wrong. In GRC, accuracy and truthfulness are paramount. But if humans have to constantly check the accuracy of AI work, then any efficiency gains are greatly reduced, if not lost altogether.

In high-risk spaces where truthfulness is critical, a better solution than constant fact checking is to prevent AI from ever guessing in the first place by:

- Limiting its context to well-established, proven, and internally consistent artifacts from inside the organization (Policies, Controls, Risks, Evidence, Audits, etc.) and to expertly curated GRC content from outside it. Trustero utilizes a patented Trust Graph and other AI tools to aid in the internal consistency.

- Requiring that it always cite its sources, link to those sources, and provide the reasoning behind the analysis, minimizing the guessing.

- Teaching the AI that it’s okay to say “I don’t know,” or “I do not have enough information,” but it’s never okay to guess.

When AI is working solely from facts, citing its sources, providing reasoning, and is not prone to hallucination and guessing, users can focus on measuring how often the AI has an answer rather than how often it’s accurate. And, Trustero AI has answers about 95% of the time for customers with quality data.
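As a rough illustration of the "cite or abstain" pattern and of measuring how often there is an answer, consider the toy sketch below. Simple keyword matching stands in for the AI, and the `Answer` class, the `ARTIFACTS` list, and the artifact IDs are all hypothetical; none of this reflects Trustero's actual implementation.

```python
from dataclasses import dataclass, field

ABSTAIN = "I do not have enough information."

# Hypothetical curated artifacts; a real system would hold policies, controls, evidence, etc.
ARTIFACTS = [
    {"id": "POL-7", "text": "Passwords must be rotated every 90 days."},
    {"id": "CTL-3", "text": "Access reviews are performed quarterly."},
]


@dataclass
class Answer:
    text: str
    citations: list = field(default_factory=list)

    @property
    def answered(self):
        # An answer only counts if it is backed by at least one cited artifact.
        return bool(self.citations)


def answer_from_artifacts(question_terms, artifacts):
    """Answer only from artifacts that mention every term; otherwise abstain."""
    hits = [a for a in artifacts
            if all(t.lower() in a["text"].lower() for t in question_terms)]
    if not hits:
        return Answer(ABSTAIN)  # never guess
    return Answer(hits[0]["text"], [h["id"] for h in hits])


def answer_rate(answers):
    """Share of questions that received a cited answer."""
    return sum(a.answered for a in answers) / len(answers) if answers else 0.0


results = [
    answer_from_artifacts(["passwords", "rotated"], ARTIFACTS),
    answer_from_artifacts(["backup"], ARTIFACTS),
]
print(answer_rate(results))  # 0.5
```

The design choice worth noting is that an uncited response is never returned as an answer: the only outcomes are a cited answer or an explicit abstention, which is what makes the answer rate a meaningful metric.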

Properties of AI for GRC:

- AI assistants and agents as the starting point for most GRC actions: Not all tasks are finished autonomously by agents, but nearly all are started and assisted by them.

- The single unified source of GRC Intelligence: AI for GRC acts as a comprehensive repository of an organization's GRC knowledge. It eliminates the need for individuals to navigate complex internal networks and question trees to find information.

- Holistic evaluation and testing in a fraction of the time: Today, this is the biggest area of time savings and effectiveness increase in AI for GRC. The ability to fully evaluate a GRC environment in a fraction of the time and do so regularly saves significant time on things like policy design and unlocks true Continuous Control Monitoring.

- Evaluation and testing at any interval: Because of the speed of testing, organizations can test regularly, spotting issues as they arise instead of at audit time.

- Complete confidence in the AI from GRC citations and links: AI inferences and answers come with citations to GRC data and links in the system.

- Agentic functionality tuned for GRC: Entire tasks or significant parts of tasks are given entirely to an agent, creating significant time and cost savings.

- Integrations into GRC platforms: Plus a deep understanding of GRC data structure.

- GRC teams have the ability to work in a highly distributed and complex environment: AI for GRC empowers teams to effectively navigate highly distributed and complex environments, streamlining processes and ensuring consistency across diverse operations.

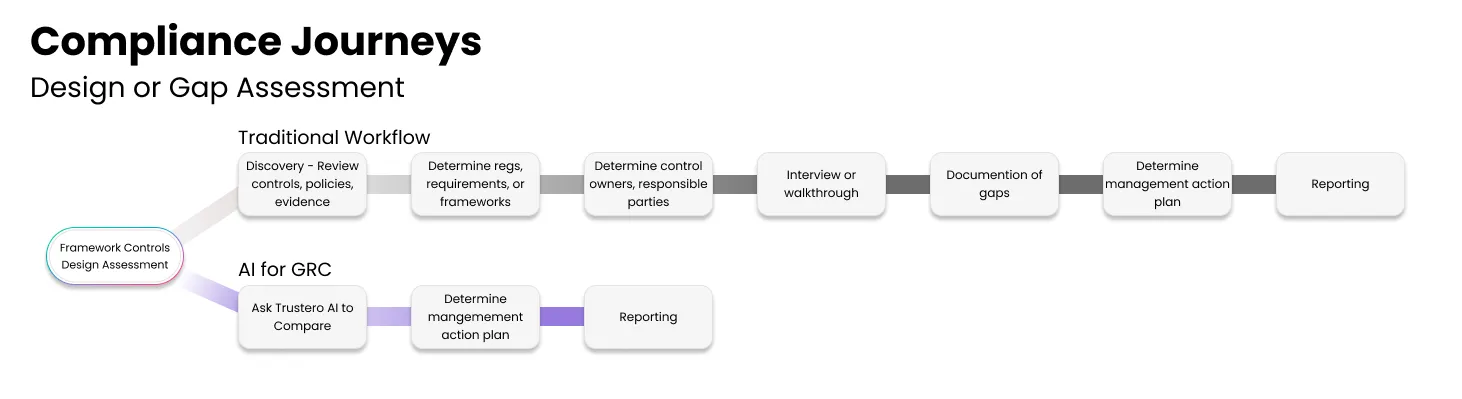

Real Stories: Hypercomplex Design Gap Assessment for a Large Financial Institution

A large financial institution was facing the complicated work of planning for FFIEC, consisting of over 100 regulations. This was complicated because they already had hundreds of pages of policy text, and was further complicated by the fact that they had just merged with another organization. The GRC leaders estimated that it would take them “thousands of hours” to complete the design gap assessment needed just to plan the work for FFIEC. Trustero AI is able to do hypercomplex design gap assessment work in minutes once it is set up and receives quality data.

How it’s measured

The true value of AI for GRC Programs and platforms is defined by their tangible impact on an organization. This impact can be measured across several key dimensions:

Efficiency and speed of tasks - Measured by time and money saved. Agentic AI solutions that complete substantial portions of compliance tasks lead to time savings measured in multiples. These tools act not just as helpers, but as intelligent collaborators that offload the most repetitive and structured GRC work.

- Example 1: A SaaS provider using Trustero Intelligence reduced internal audit preparation time from 3 weeks to 1 day, eliminating the need for extensive cross-departmental coordination.

- Example 2: An enterprise-scale financial services client cut their evidence collection and analysis cycle by 75%, replacing weekly manual follow-ups with daily automated assurance checks.

- Example 3: A cloud-native company preparing for global expansion used pre-audit evaluations to skip conventional workflows and accelerate readiness for multiple frameworks (SOC 2, ISO 27001, GDPR) from 9 months to under 90 days, saving hundreds of staff hours and consultant fees.

Accuracy Improvements - Measured by reductions in mistakes and gaps. Humans are prone to error, whether in missed evidence, incomplete control mapping, or misinterpretation of framework requirements. AI dramatically improves precision and completeness, acting like "spell check for compliance" and helping teams get it right the first time.

- Example 1: Automated gap analysis revealed 42 previously unidentified control weaknesses during an internal readiness review, reducing the likelihood of external audit findings.

- Example 2: Trustero's Control Assurance flagged configuration drift in cloud access policies that manual reviews had overlooked for 6 months.

- Example 3: A mid-market tech firm reduced audit findings by 80% year-over-year after implementing AI-driven controls optimization and continuous control testing.

Effectiveness of GRC workstreams - Measured by outcomes, consistency, and risk mitigation. AI doesn’t just make existing work faster—it makes it smarter. AI for GRC enhances the effectiveness of compliance efforts by applying consistent logic, learning from historical data, and ensuring workstreams align with the latest standards.

- Cross-functional compliance activities are no longer siloed, improving coordination between legal, IT, security, and operations.

- Real-time alerts and remediation suggestions reduce the mean time to compliance gap closure.

- Risk assessments become iterative and context-aware, not checklist-driven.

New internal capabilities - Measured by money saved and new opportunities unlocked. AI unlocks capabilities that were previously impractical due to human bandwidth, cost, or organizational complexity:

- Increased evaluation frequency and scope: Organizations can now conduct continuous auditing across 100% of their systems, not just a sample.

- Self-assessment empowerment: Business units can validate their own compliance without waiting for central reviews, speeding innovation while maintaining assurance.

- GRC copilot for every team: From HR to DevOps, teams can now access instant, expert-level GRC guidance without needing to escalate to compliance leadership.

- Holistic visibility: Leadership gains a real-time, unified view of the organization’s compliance status across geographies, subsidiaries, and frameworks.

- Knowledge democratization: Institutional GRC knowledge is no longer locked in a few expert minds—it’s codified and accessible to anyone in the organization.

Better Risk Posture - Known risks are managed better, and organizations get better at discovering unknown risks. Plus, the team has the capacity to analyze them faster and test mitigations. Measured by:

- Increase in proactive risk identification: Measure the number of previously unknown risks identified by AI analysis compared to prior periods.

- Time savings in risk analysis: Quantify the reduction in person-hours required for risk analysis activities due to AI-driven automation.

- Acceleration of mitigation testing: Measure the decrease in the average time taken to test and validate risk mitigation strategies with AI support.

- Improvement in risk awareness scores: Assess changes in risk awareness across the organization through surveys or training completion rates related to AI-identified risks.

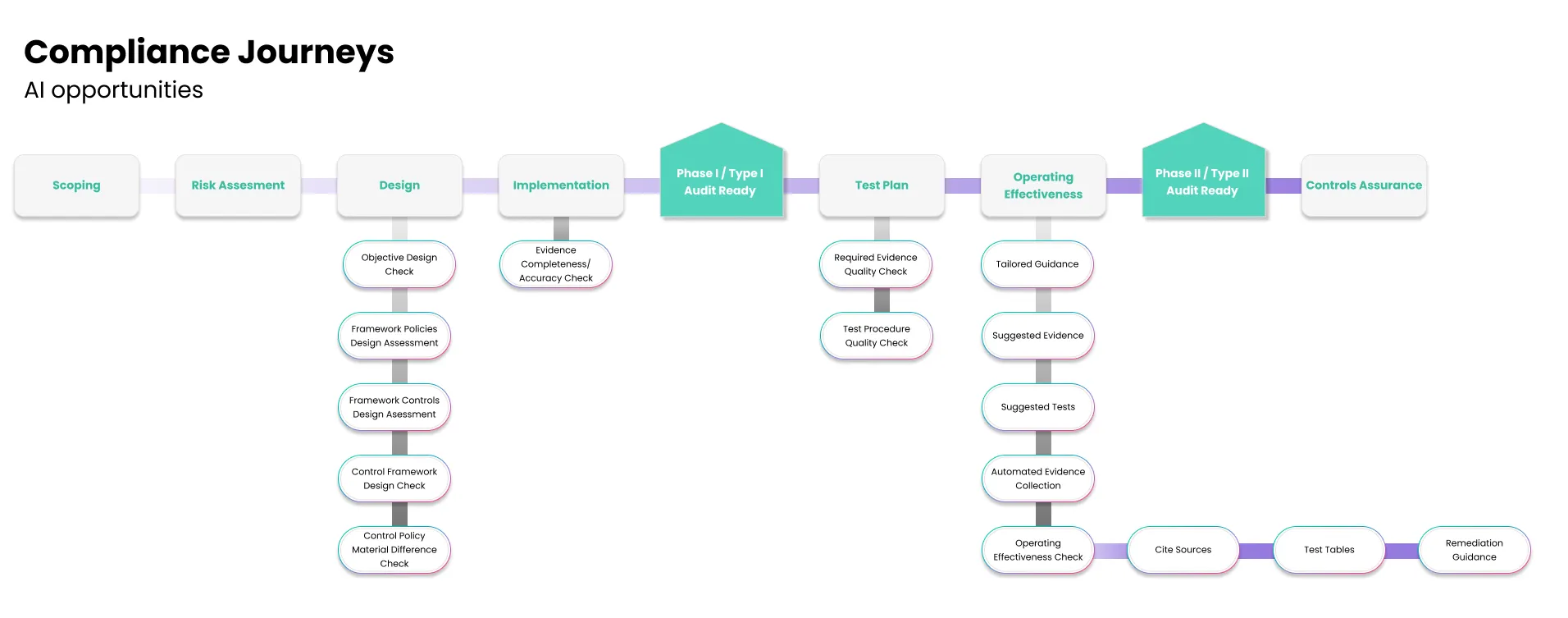

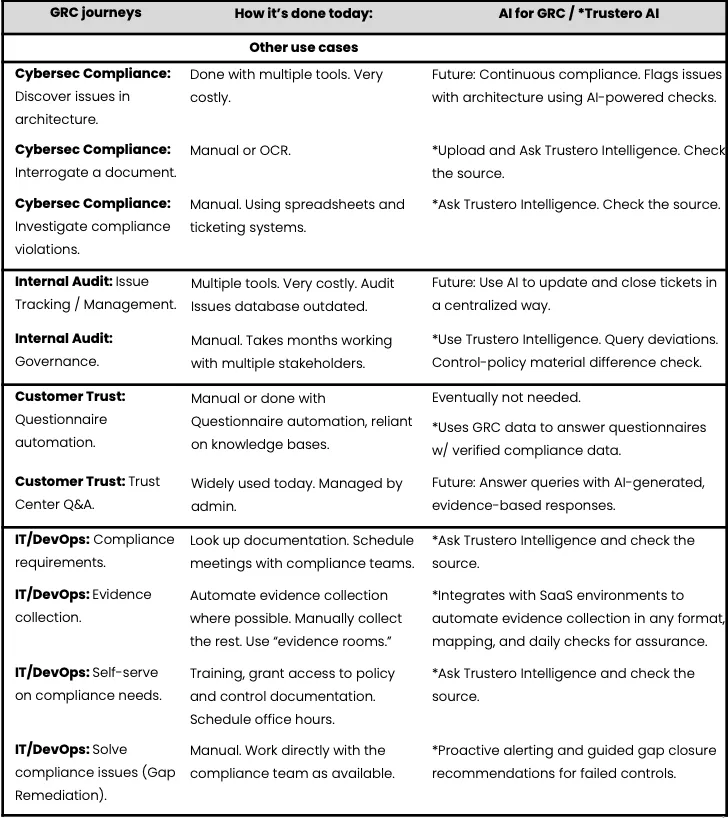

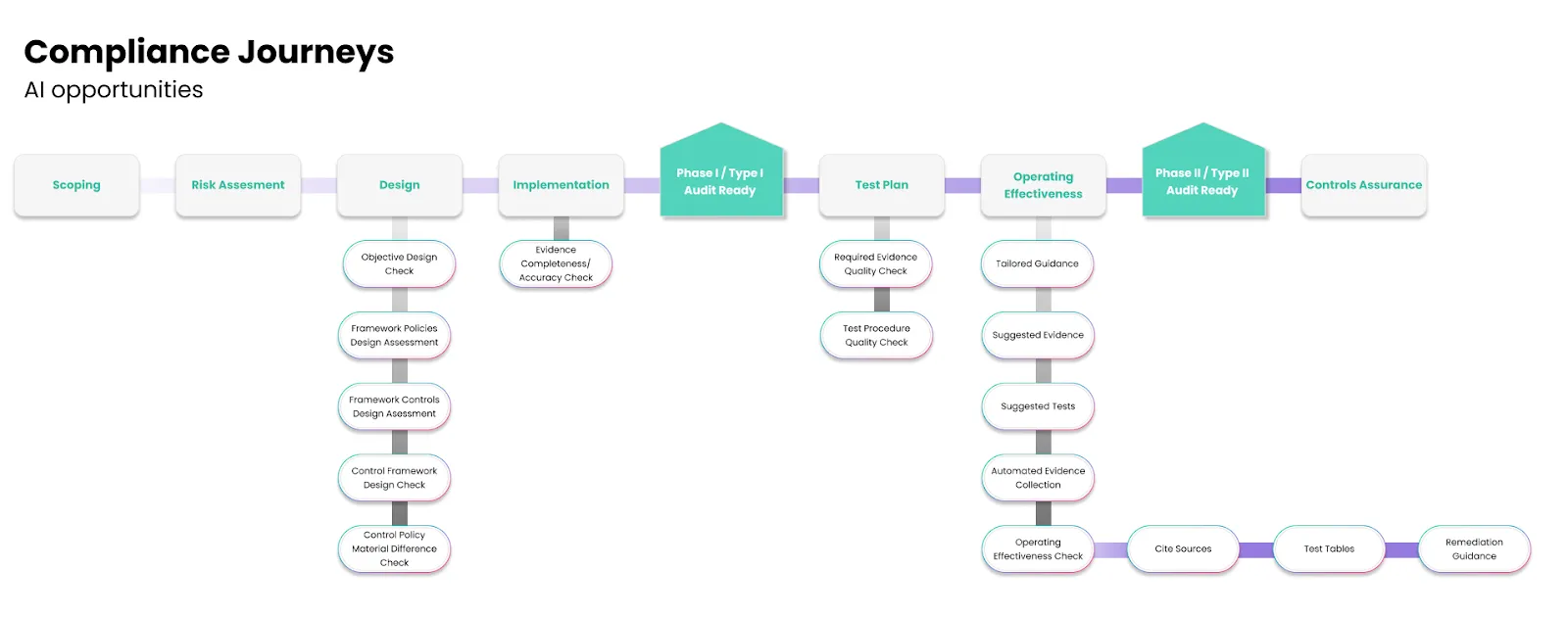

What can AI for GRC do?

AI for GRC is not one-size-fits-all. Different roles across the organization have unique compliance challenges, and agentic AI meets each where they are. Below is a structured view of how AI for GRC and Trustero AI empowers key stakeholders with intelligent, role-specific support. The tables below lay out specific use cases for AI for GRC and how they will be impacted or are being impacted already. Items marked with an asterisk (*) represent current Trustero AI capabilities.

What does the AI-enabled GRC practitioner look like?

The AI-enabled GRC practitioner represents a shift from compliance administrator to strategic orchestrator of risk and governance. In the past, GRC professionals spent the majority of their time on mundane compliance tasks - chasing evidence, formatting audit documents, and managing manual workflows. AI radically transforms that role by removing the burdens of repetitive, artifact-centric work and enabling practitioners to focus on higher-order thinking, risk management, communication, and strategy.

The goal of AI in GRC is not to replace the human—it is to free the human to do the work that truly matters: mitigating risk, advising the business, and designing resilient, trustworthy systems.

Core characteristics of the AI-Enabled GRC practitioner:

- Risk-focused: No longer bogged down by the mechanics of compliance, these practitioners use AI insights to prioritize and act on real organizational risks. With access to all available information at their fingertips, they become true risk analysts and trusted business advisors.

- Tech-savvy, not technical: They understand how AI works in context, leveraging tools like Trustero without needing to code. They navigate systems, extract insights, and guide outcomes.

- AI-augmented strategist: Uses AI to monitor controls, interpret frameworks, and evaluate evidence in real time, moving from snapshot compliance to true continuous assurance.

- Architect and analyst: Uses AI to summarize, refine, or realign policies and controls with emerging regulations. Identifies drift, contradictions, and redundancies before they become liabilities. Information inquiry turnaround times are much shorter.

- Communication powerhouse: Crafts AI-generated executive summaries that translate control-level data into strategic clarity for stakeholders, boards, and partners.

- Contextual expert: Ensures that AI-generated insights are aligned with business objectives, cultural considerations, and regulatory realities. The AI offers clarity—the human provides judgment.

- Happier person: The AI-enabled GRC practitioner does not perform mundane, repetitive tasks, sees more value added from their efforts, and has higher satisfaction from their role.

What changes in daily work?

- Artifact wrangling is reduced or eliminated. Evidence gathering and analysis are automated, controls are monitored in real time, and issues are addressed as they arise.

- Instead of preparing for and attending status update meetings, practitioners review AI-generated dashboards and reports, focusing on what matters.

- Instead of spending hours rewriting a policy, they use AI to draft, revise, and align it in minutes.

- Instead of tracking down SME answers, they ask their AI assistant, Trustero Intelligence, and validate the result.

- Instead of constantly organizing and maintaining data and artifacts, practitioners use them to gain valuable insights.

The AI-enabled GRC practitioner is more strategic, more empowered, and more valuable than ever. As organizations adopt AI for GRC, these professionals will lead the charge, shaping smarter risk management practices, enabling faster business growth, and embedding compliance into the culture of the enterprise.

This is not a replacement for expertise—it is an amplification of it. The future of GRC belongs to those who pair their insight with intelligence.

Real-World Use Cases and Industry Insights

At Trustero, we’ve observed that AI brings the most immediate and transformative value to GRC teams in five core areas. These are not hypothetical scenarios—they are active, real-world use cases that Trustero Intelligence powers for organizations every day:

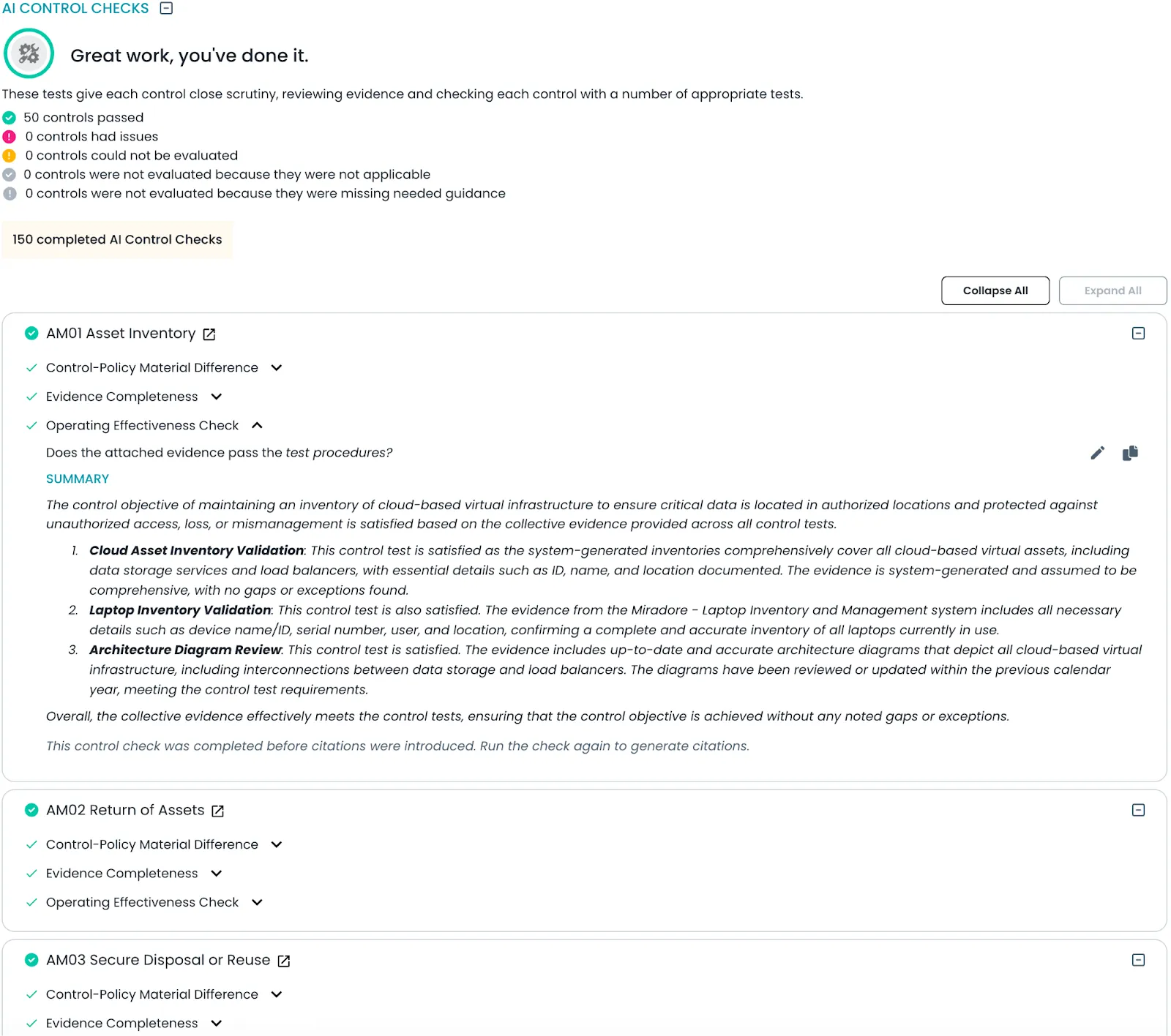

1. Control Operating Effectiveness Checks

AI automates the process of reviewing control test procedures against their intended objectives. Instead of manually comparing documentation and execution, AI rapidly scans and analyzes whether each control is being tested appropriately and if the execution aligns with the original design. This means GRC teams can quickly pinpoint where controls may be operating in name only, identify gaps between what’s documented and what’s actually happening, and address issues before they become audit findings. By continuously monitoring control effectiveness, organizations can maintain a stronger compliance posture and reduce the risk of control failures going undetected.

2. Policy Design Assessment

Ensuring that company policies fully address all relevant framework requirements is a complex, ongoing challenge—especially as regulations evolve. AI assists by mapping policies directly to the applicable standards (such as ISO 27001, SOC 2, or GDPR), highlighting any areas where requirements are overcommitted, under-addressed, or missing entirely. This targeted assessment helps organizations avoid unnecessary obligations while closing critical gaps, so policies remain both efficient and defensible. The result is a policy set that is always aligned with current frameworks, reducing the risk of non-compliance and streamlining future audits.
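Once policies have been mapped to requirements, the three categories above reduce to a simple comparison. The sketch below assumes a hypothetical `coverage` map from requirement ID to `"full"` or `"partial"`, and treats any commitment with no matching requirement as overcommitted. The IDs are made up, and the mapping step itself (the part AI assists with) is not shown.

```python
def assess_policy_design(required, coverage):
    """Classify requirements and commitments after policies have been mapped.

    required: set of requirement IDs from the applicable framework(s)
    coverage: dict of commitment/requirement ID -> "full" or "partial"
    """
    return {
        # Required by the framework, but no policy statement covers it.
        "missing": sorted(r for r in required if r not in coverage),
        # Covered, but only partially.
        "under_addressed": sorted(r for r in required if coverage.get(r) == "partial"),
        # Promised in policy, but not required by any framework in scope.
        "overcommitted": sorted(c for c in coverage if c not in required),
    }


required = {"REQ-1", "REQ-2", "REQ-3"}
coverage = {"REQ-1": "full", "REQ-2": "partial", "EXTRA-9": "full"}
print(assess_policy_design(required, coverage))
# {'missing': ['REQ-3'], 'under_addressed': ['REQ-2'], 'overcommitted': ['EXTRA-9']}
```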

3. Evidence Validation

One of the most time-consuming aspects of GRC is collecting, validating, and maintaining audit evidence. AI for GRC takes a proactive approach: it scans across systems and documentation to flag evidence that is outdated, missing, or insufficient—long before an auditor requests it. This early detection enables teams to remediate issues in real time, reducing scramble and stress during audit cycles. Automated evidence validation also minimizes the risk of failed audits due to incomplete documentation, and ensures that compliance artifacts are always up to date and ready for review.
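The "outdated or missing" part of this check can be sketched in a few lines. The example assumes a hypothetical map from control ID to the date its latest evidence was collected, and an illustrative 90-day freshness window. Judging whether evidence is sufficient requires reading the evidence itself and is not modeled here.

```python
from datetime import date, timedelta


def validate_evidence(controls, evidence, today, max_age_days=90):
    """Label each control's evidence as 'missing', 'outdated', or 'ok'.

    evidence: dict of control ID -> date the latest evidence was collected
    max_age_days: illustrative freshness window, not a regulatory value
    """
    status = {}
    for control in controls:
        collected = evidence.get(control)
        if collected is None:
            status[control] = "missing"
        elif today - collected > timedelta(days=max_age_days):
            status[control] = "outdated"
        else:
            status[control] = "ok"
    return status


evidence = {"C1": date(2025, 1, 1), "C2": date(2024, 6, 1)}
print(validate_evidence(["C1", "C2", "C3"], evidence, today=date(2025, 1, 31)))
# {'C1': 'ok', 'C2': 'outdated', 'C3': 'missing'}
```

Passing `today` explicitly, rather than calling `date.today()` inside the function, keeps the check reproducible when it is run on a schedule or in tests.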

4. Dynamic Risk Register

Traditional risk registers are often filled with generic threats and can quickly become stale or irrelevant. Trustero’s AI analyzes your actual technology stack, business processes, and regulatory context to surface risks that are truly relevant to your organization. It identifies gaps in the risk register—such as threats that aren’t tied to any controls or emerging risks based on recent changes in your environment. This context-aware approach ensures that risk management efforts are focused on real exposures, not just theoretical ones, and that controls are mapped to the most pressing threats facing your business.
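The two register gaps described above (threats not tied to any control, and parts of the environment with no risk entry) can be illustrated with a small sketch. The risk records, the `control_map`, and the asset names are hypothetical; discovering the assets and proposing the risks is the part an AI system would contribute and is not shown.

```python
def register_gaps(risks, control_map, observed_assets):
    """Find risks with no mapped control and observed assets with no risk entry.

    risks: list of dicts with "id" and "asset"
    control_map: dict of risk ID -> list of control IDs mitigating it
    observed_assets: set of asset names seen in the actual environment
    """
    unmitigated = sorted(r["id"] for r in risks if not control_map.get(r["id"]))
    assets_in_register = {r["asset"] for r in risks}
    return {
        "risks_without_controls": unmitigated,
        "assets_without_risks": sorted(observed_assets - assets_in_register),
    }


risks = [
    {"id": "R1", "asset": "payments-db"},
    {"id": "R2", "asset": "vendor-sso"},
]
control_map = {"R1": ["C-10"], "R2": []}
observed = {"payments-db", "vendor-sso", "data-lake"}
print(register_gaps(risks, control_map, observed))
# {'risks_without_controls': ['R2'], 'assets_without_risks': ['data-lake']}
```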

5. Automated AI Report Generation

GRC professionals spend significant time transforming technical documents, risk logs, and audit histories into clear, actionable reports for executives and boards. AI streamlines this process by generating concise, board-ready summaries from complex documentation. Whether it’s distilling hundreds of pages of policy into a one-page executive brief or summarizing risk trends for quarterly reporting, AI ensures that leadership receives the insights they need—without the manual rework. This allows teams to communicate compliance status, risk posture, and key findings with greater clarity and speed.

These aren’t theoretical use cases—they’re live in Trustero today. By automating and enhancing these critical areas, AI empowers GRC teams to operate more efficiently, reduce manual workload, and focus on strategic risk management rather than repetitive, low-value tasks.

Cross-Industry Patterns We’re Seeing

- Fastest Time-to-Value = Evidence + Effectiveness. Teams that start with Evidence Validation and Control Operating Effectiveness see lift first, because they reduce scramble and audit churn before tackling broader risk automation.

- Policy Debt is Real. Policy Design Assessment consistently surfaces over-commitment (promising more than you can prove) and under-coverage (gaps against ISO/SOC/GDPR). Fixing both reduces audit findings and contract exceptions.

- Register Reality Check. AI-driven Dynamic Risk Register replaces generic risks with stack-aware items (cloud configs, vendor paths, data flows), raising the signal-to-noise ratio of risk reviews.

- Narratives Unlock Decisions. Narrative Summarization is the bridge to execs and boards—clear, cited, one-page briefs compress weeks of meetings into one readout.

- Continuous > Periodic. Daily/weekly AI checks (your Control Assurance) catch drift early and convert “point-in-time compliance” into rolling assurance without headcount creep.

- Quality In, Compounding Out. Clean policy/control metadata and mapped systems create compounding AI benefits—every new framework or product leverages the same Trust Graph context.

Industry Snapshots & What’s Working Now

Financial Services (Banking, FinTech, Payments)

- Biggest quick win: AI control effectiveness on access management, change management, and vendor controls tied to FFIEC/SOX/PCI families.

- Observed impact: Fewer late findings during internal audits; faster RCSA cycles with stack-specific risks instead of generic “cyber” entries.

- What to watch: Mapping to operational-resilience expectations (impact tolerances, severe-but-plausible scenarios) using Narrative Summarization for board packs.

Healthcare & Life Sciences

- Biggest quick win: Evidence Validation for HIPAA/PHI safeguards and data-sharing workflows; AI flags stale BAAs and missing privacy attestations.

- Observed impact: Fewer privacy exceptions during partner reviews; faster questionnaire turnarounds to win integrations.

- What to watch: Linking clinical/research systems to policy design so AI can prove “minimum necessary” and data lineage in one click.

SaaS / Cloud Platforms

- Biggest quick win: AI-assisted policy design aligned to SOC 2 + ISO 27001 (plus regionals like GDPR) and auto-mapping to IaC/cloud controls.

- Observed impact: Pre-audit readiness drops from months to weeks; security questionnaires answered by AI drafts that SMEs only validate.

- What to watch: Continuous drift detection on multi-cloud posture feeding risk register gap remediation.

Manufacturing & Supply Chain

- Biggest quick win: Vendor-risk evidence validation (certs, pen tests, SLAs) and threat identification around OT/IT interfaces.

- Observed impact: Reduction in aged vendor exceptions; proactive alerts on control gaps tied to logistics partners.

- What to watch: Policy harmonization across plants and regions—AI normalizes controls so global audits stop re-finding the same issues.

Retail & eCommerce

- Biggest quick win: Control effectiveness on payments and customer-data flows; AI detects config drift that breaks PCI/PII promises.

- Observed impact: Fewer cart-blocking emergencies from last-minute audit fixes; smoother partner assessments for marketplace integrations.

- What to watch: Seasonal change bursts—AI checks push-to-prod changes against policy before peak traffic.

Energy & Utilities

- Biggest quick win: AI mapping of asset inventories to required controls; narrative summaries convert technical posture into regulator-ready briefs.

- Observed impact: Shorter compliance evidence cycles; clearer accountability across operators and third parties.

- What to watch: Linking incident playbooks to AI so lessons learned automatically update risks and tests.

Public Sector & GovTech

- Biggest quick win: Policy design assessment against baseline frameworks; evidence validation on access/logging for data sensitivity tiers.

- Observed impact: Faster ATO/authority reviews; reduced rework on control narratives.

- What to watch: Explainability—Trustero’s citations and reasoning trails become the artifact regulators ask to see.

Pharma / MedTech

- Biggest quick win: AI-assisted alignment of quality, clinical, and IT controls; narrative summaries for submission-adjacent audits.

- Observed impact: Fewer late CAPAs due to proactive evidence gaps; cleaner trial-data handling narratives.

- What to watch: Cross-walking product lifecycle controls with enterprise security to eliminate audit “ownership” gaps.

Trustero AI and the future of AI for GRC

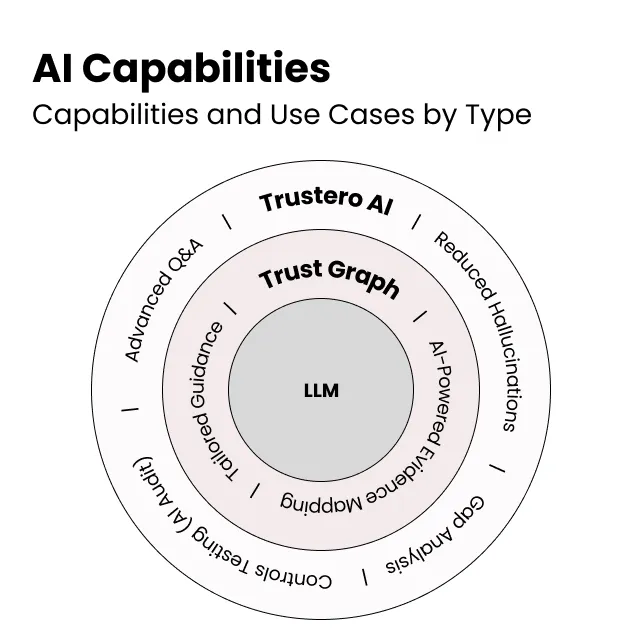

Trustero AI is an AI purpose-built for GRC. It shares the abilities we have all come to expect from LLMs and agentic AI: general knowledge about the world and regulatory environments, the ability to make inferences and summarize large volumes of information, and the ability to direct AI agents to conduct research and take corrective action. In addition to these general-purpose abilities, Trustero AI reduces hallucinations for agentic GRC tasks; collects and organizes a business’s GRC data; conducts gap analysis across policies, controls, and frameworks; makes remediation recommendations relevant for all parties involved in the GRC process; conducts continuous audits across all of a business’s obligations, flagging risks; answers employee questions about their GRC obligations; and more. These GRC-specific AI abilities extend general-purpose AI to help solve GRC-native problems that typically require human judgment.

The breadth of problems that AI for GRC helps solve requires a new way of user interaction, one that is goal-oriented and unbound by pre-programmed workflows. Users interact with traditional enterprise SaaS through a workflow user interface, selecting from a menu of choices that lead to web forms or step-by-step ‘wizards’. This kind of interface works well for pre-established procedures, but it effectively boxes AI into a workflow. An AI-native user interface is flexible enough to address an open-ended range of problems, helping users quickly accomplish their goals. It simulates a human co-worker operating on the other end of a communications medium.

Trustero AI uses a dynamic canvas in which the AI determines what information to show. Users work with AI for GRC in natural language to accomplish a task, and it responds in natural language along with relevant forms, reports, graphs, pointers, and so on. This gives the user a natural flow for working with an AI partner.

All businesses invest in protecting their data (including GRC data) and in preventing it from being used to train general-purpose LLMs. Training private LLMs for GRC purposes is an expensive project with no guaranteed outcome. Trustero AI instead ingests a business’s GRC data on a recurring schedule and stores it in that business’s own tenant. The ingested data is organized using AI to improve the accuracy of future Trustero AI queries. GRC data can be ingested from a diverse range of sources, including traditional GRC platforms, cloud providers, SaaS, shared data stores, APIs, and more. Trustero AI asks the user about available data sources and automatically ingests the data needed to perform the AI task.

LLMs are continuously improving in their inference and research capabilities. Trustero AI builds on the latest LLM abilities for multi-step analysis and adds capabilities where LLMs alone fall short: reducing hallucinations, conducting complex analysis, and solving specific GRC problems.

When data is ingested into Trustero, AI is used to connect related information. These connections form a trust graph that is subsequently consulted to limit the context of LLM operations. Trustero AI agents then narrow the relevant data context further based on the task at hand. Together, these two stages of context reduction have been found to greatly reduce hallucinations.
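The two-stage context reduction described above can be illustrated with a minimal sketch. It is not Trustero's implementation: the graph contents, the artifact naming scheme (`kind:name`), and the functions `related_artifacts` and `task_context` are all hypothetical, chosen only to show how a graph lookup followed by a task-specific filter shrinks what an LLM is given.

```python
from collections import deque

# Hypothetical trust graph: artifact id -> ids of directly related artifacts.
# Ids use an assumed "kind:name" convention for illustration only.
TRUST_GRAPH = {
    "control:access-review": ["policy:access", "evidence:okta-export"],
    "policy:access": ["framework:soc2-cc6.1"],
    "evidence:okta-export": [],
    "framework:soc2-cc6.1": [],
    "policy:travel": ["evidence:expense-report"],
    "evidence:expense-report": [],
}


def related_artifacts(graph, start, max_hops):
    """First reduction: breadth-first walk of artifacts within max_hops of start."""
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        node, hops = queue.popleft()
        if hops == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, hops + 1))
    return seen


def task_context(graph, start, max_hops, allowed_kinds):
    """Second reduction: keep only the artifact kinds the task needs."""
    reachable = related_artifacts(graph, start, max_hops)
    return sorted(a for a in reachable if a.split(":", 1)[0] in allowed_kinds)


context = task_context(
    TRUST_GRAPH, "control:access-review", 2, {"control", "policy", "evidence"}
)
print(context)
```

In this toy graph, the unrelated travel policy never reaches the context, and the framework node is dropped by the task filter, so the model only sees the control, its policy, and its evidence.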

One of the most complex GRC tasks is conducting an audit to determine whether a business is fulfilling a regulatory obligation. Trustero AI provides an audit AI agent that forms a plan to test an individual control and a related set of controls. Each control test agent breaks down complex natural-language test procedures into multiple LLM inference steps. Each inference step is given detailed context, allowing the test to complete with a low hallucination rate. Based on the sequence of tests conducted, the audit AI agent then formulates an opinion as to whether the business fulfilled the tested obligation.
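As a rough sketch of the flow just described (decompose a test procedure into steps, run each step with its own context, then roll the results up into an opinion), consider the following. Everything here is an assumption made for illustration: the `StepResult` type, the sentence-based `split_procedure`, and especially `keyword_infer`, which is a deterministic stand-in for an LLM inference call and not how Trustero AI evaluates evidence.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class StepResult:
    step: str
    passed: Optional[bool]  # None means the evidence was inconclusive
    reason: str


def split_procedure(procedure: str) -> List[str]:
    """Naive decomposition: treat each sentence as one inference step."""
    return [s.strip() for s in procedure.split(".") if s.strip()]


def keyword_infer(step: str, evidence: Dict[str, bool]) -> StepResult:
    """Hypothetical stand-in for one LLM inference step over scoped evidence."""
    key = step.lower()
    if key not in evidence:
        return StepResult(step, None, "no evidence supplied for this step")
    return StepResult(step, evidence[key], "evidence recorded for this step")


def run_control_test(
    procedure: str,
    evidence: Dict[str, bool],
    infer: Callable[[str, Dict[str, bool]], StepResult],
) -> List[StepResult]:
    """Run every step of the procedure through the inference function."""
    return [infer(step, evidence) for step in split_procedure(procedure)]


def audit_opinion(results: List[StepResult]) -> str:
    """Roll step results up into a single opinion."""
    if not results or any(r.passed is None for r in results):
        # Missing evidence is reported, never guessed at.
        return "inconclusive"
    if any(r.passed is False for r in results):
        return "not fulfilled"
    return "fulfilled"


procedure = "Verify MFA is enforced. Verify access reviews occur quarterly."
evidence = {
    "verify mfa is enforced": True,
    "verify access reviews occur quarterly": False,
}
results = run_control_test(procedure, evidence, keyword_infer)
print(audit_opinion(results))
```

Keeping each step small and explicit means a reviewer can see which step failed or lacked evidence, which is the property that matters for keeping a human in the loop.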

AI for GRC offers a transformative approach, moving beyond traditional workflows to a model where data-driven insights and automation drive efficiency, accuracy, and effectiveness. By empowering practitioners with tools that reduce mundane tasks, AI will elevate strategic thinking, risk mitigation, and communication, enabling continuous compliance, improved risk posture, and enhanced internal capabilities. Ultimately, AI for GRC will not just be an improvement, but a complete revolution, transforming compliance from a checkbox exercise to a core, strategic advantage that builds trust, resilience, and accountability.

AI/LLM safety

When discussing the security and safety of an AI solution, there are generally two classes of concerns: traditional security and AI-related security.

Traditional security

Trustero’s approach to traditional security is anchored in three key philosophies: Zero Trust, Security by Design, and Risk-Based Security Management. These principles guide our strategy to safeguard customer data, ensure regulatory compliance, and maintain business resilience in an evolving threat landscape. The Zero Trust framework operates under the principle of “never trust, always verify,” assuming threats can originate both inside and outside. Accordingly, Trustero AI implements strict access controls, continuous authentication, and data isolation across tenants. Developers, for instance, access repositories and cloud environments using role-based access controls enforced through identity federation and multi-factor authentication (MFA).

Security by Design is ingrained in the development lifecycle, incorporating secure coding practices, threat modeling, and automated security testing (SAST/DAST) into the CI/CD pipeline. Entry points are engineered with least privilege principles and fortified by robust input validation, rate limiting, and OAuth 2.0-based authentication, effectively minimizing the attack surface from day one. Trustero also maintains strict policies around vulnerability scanning and timely patching.

Complementing this, the Risk-Based Security approach ensures that resources are allocated where they deliver the highest impact. Using frameworks like MITRE ATT&CK and NIST CSF, Trustero continuously assesses and prioritizes threats based on exploitability and potential business impact. Additionally, Trustero evaluates and replaces third-party components prone to vulnerabilities to maintain a strong security posture.

AI-related security

On the other hand, AI-related security is a newer and rapidly evolving domain with active research and emerging practices. While some techniques can be adapted from traditional security, unique challenges require tailored solutions.

Trustero AI, for example, relies heavily on customer context, such as GRC artifacts including policies and controls, and employs several measures to ensure data quality and trustworthiness. Role-based access controls and minimized exposure to non-authenticated data entry points are foundational, with authenticated sources considered inherently more reliable. During indexing and categorization, Trustero decomposes and inspects artifacts; any anomalies will be excluded from contextualization. Trustero’s patented Trust Graph is used to categorize and correlate documents, and Trustero AI, already trained with general GRC knowledge, has predefined expectations about artifact structure and language. For example, control documents must meet specific criteria and contain standardized language; otherwise, they are flagged during the control test.

Beyond these practices, Trustero addresses more complex AI-related risks like inaccuracies, inconsistencies, and hallucinations—critical concerns in high-trust applications. Trustero carefully assesses workflows to determine the optimal blend of AI, traditional algorithms, or both. LLMs may excel in some areas, while traditional methods offer better reliability elsewhere, and a hybrid approach often produces the best results.

Randomness can be reduced significantly when true/false conclusions are clearly distinct. Clarifying data context and minimizing prompt ambiguity are also highly effective, which is why Trustero publishes best practices for authoring artifacts like policies, controls, and test documents, along with guidance on evidence requirements.

To combat hallucinations, Trustero utilizes techniques such as prompt engineering, reducing the scope, providing context, and asking for reasoning and references. Trustero AI will not fabricate an answer; if data is insufficient or inconclusive, it will clearly state this and explain why. Our system emphasizes explainable reasoning—each conclusion is accompanied by transparent references to the data used. If a definitive answer cannot be provided, the AI offers diagnostic feedback and suggestions for remediation.
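To make these techniques concrete, here is a small sketch of a scoped prompt that asks for reasoning and references, paired with a check that refuses answers citing anything outside the supplied context. The prompt wording, the `INSUFFICIENT DATA` marker, and the functions `build_prompt` and `check_answer` are hypothetical and are not Trustero's actual prompts or validation logic.

```python
import re
from typing import Dict


def build_prompt(question: str, context: Dict[str, str]) -> str:
    """Build a narrowly scoped prompt from source id -> text pairs."""
    sources = "\n".join(f"[{sid}] {text}" for sid, text in context.items())
    return (
        "Answer using ONLY the sources below.\n"
        "Explain your reasoning and cite source ids in square brackets.\n"
        "If the sources are insufficient, reply starting with "
        "INSUFFICIENT DATA and explain what is missing.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}\n"
    )


def check_answer(answer: str, context: Dict[str, str]) -> str:
    """Accept an answer only if every citation points at a supplied source."""
    if answer.startswith("INSUFFICIENT DATA"):
        return "abstained"
    cited = set(re.findall(r"\[([^\]]+)\]", answer))
    if not cited:
        return "rejected: no references"
    unknown = cited - set(context)
    if unknown:
        return "rejected: unknown references " + ", ".join(sorted(unknown))
    return "accepted"


context = {
    "POL-7": "All production access requires MFA.",
    "EVD-12": "Identity provider export shows MFA enabled for 48 of 48 users.",
}
prompt = build_prompt("Is MFA enforced for production access?", context)
print(check_answer("Yes. [POL-7] requires MFA and [EVD-12] confirms it.", context))
```

A check like this does not prove an answer is correct, but it gives the human reviewer a short list of cited sources to verify and surfaces unsupported answers before they reach a report.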

Trustero strongly believes in keeping a human in the loop, and our focus remains on enabling experts to efficiently verify AI-generated outputs both factually and logically.

Conclusion: The future of GRC starts here

AI for GRC is not a futuristic concept — it’s a practical and already operational approach that transforms the way organizations understand, manage, and act on compliance and risk. AI for GRC represents a fundamental paradigm shift in how organizations will approach Governance, Risk, and Compliance – moving from a workflow-driven model to one where quality data is connected to the AI for GRC and all actions begin with a question or a prompt.

GRC is uniquely positioned for the AI revolution—giving practitioners a rare chance not just to catch up with other functions and shed the reputation of being a bottleneck, but to lead by example in driving AI-powered business transformation.

The traditional GRC model — reliant on human-intensive processes, static documentation, and periodic assessments — is no longer sustainable. The stakes are too high, and the complexity too great. Meanwhile, the expectations of boards, regulators, customers, and partners are only rising. With AI, GRC can finally scale. Exponentially.

Compliance is evolving — from stamp to substance

For too long, many organizations have approached compliance as a box to check — a set of attestations to collect, an audit to survive, a framework to follow just enough to pass. It’s been the price of entry to markets, a sales-enabling tool more than a true business function.

But the future is different, and AI is the catalyst.

With AI for GRC, compliance transforms into something deeper: a real-time roadmap for risk mitigation. Frameworks are no longer treated as bureaucratic obstacles, but as structured guides to building resilient, secure, and trustworthy systems.

This shift moves us beyond compliance for compliance’s sake, and toward compliance as strategic infrastructure. AI elevates the level of rigor and responsiveness in compliance programs, making risk management the primary outcome, not a hopeful side effect.

And when compliance becomes about actually mitigating risk, not just saying you did, it earns back the credibility and respect it has too often lost.

What happens next?

Organizations that embrace AI for GRC today will:

- Lead in regulated markets with confidence

- Move faster while staying aligned with evolving requirements

- Reduce the cost of compliance in time, money, and morale

- Make better decisions, with clearer insight and less friction

- Build cultures of trust, resilience, and accountability

- Treat compliance not as a box-checking exercise, but as a real and repeatable path to risk reduction

Those who wait risk falling behind, trapped in workflows that can’t keep up with the business they’re trying to support.

A new role for GRC leaders

In this new era, GRC professionals are not just rule enforcers or document managers. They are strategic advisors, systems thinkers, and AI operators. They drive clarity, consistency, and control across the entire organization.

This is what the AI-enabled GRC practitioner looks like:

- Equipped with tools that think and reason

- Focused on outcomes, not checklists

- Embedded in decision-making, not isolated from it

- Scaling their impact without scaling their team

At Trustero, we’re building this future with our customers and for the entire industry.